GS3DS

Accelerated 3D Segmentation Using Gaussian Splatting

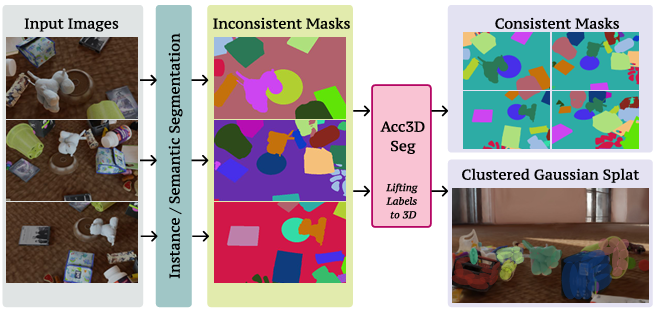

We introduce GS3DS, a method that takes a set of multi-view RGB images as input and passes them through a pre-trained instance/semantic segmentation method. Although accurate, segmentation methods for 2D images do not generate multi-view consistent segmentation masks; observe how the labels vary across the images. Our method formulates this as a clustering problem in 3D, using feature vectors in 3D space that are trained with contrastive losses, and generates consistent segmentation masks.

Abstract

Recent breakthroughs in novel-view synthesis, particularly with Neural Radiance Fields (NeRF) and 3D Gaussian Splatting (3DGS), have given rise to research on lifting 2D segmentation labels to these 3D representations in order to solve instance/semantic segmentation in 3D. A significant challenge in lifting 2D instance labels to 3D is that they often lack multi-view consistency. Simply lifting these labels leads to inferior 3D label predictions, as they may not accurately reflect the object’s appearance from different viewpoints. Existing lifting methods resolve this to some extent but often rely on a costly two-stage approach: the first stage optimizes 3D embeddings, and the second stage clusters them. In this work, we propose a novel framework that integrates the two stages into a single one, significantly reducing training time and providing superior 3D segmentation performance. Specifically, we perform organization and clustering in a single stage by decoding embeddings into instance labels during the optimization of the 3D representation itself. Further, we binarize the learned embeddings to extract the instance labels for faster decoding. Notably, unlike previous approaches, our method does not need to know the number of objects in the scene and eliminates the need for offline clustering. This object-agnostic and efficient clustering approach makes our method well-suited for real-world scenarios with varying object counts. We demonstrate the effectiveness of our method on challenging and complex scenes from ScanNet, Replica3D and Messy-Rooms.
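
To illustrate the idea of reading instance labels directly from binarized embeddings, here is a minimal sketch. It is not the GS3DS implementation: the function name `decode_instance_ids`, the 0.5 threshold, the assumption that embeddings lie in [0, 1] (for example after a sigmoid), and the little-endian bit order are all illustrative choices that the abstract does not specify.

```python
import numpy as np


def decode_instance_ids(embeddings: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Map per-point embeddings to integer instance IDs by binarization.

    embeddings: (N, D) array, assumed to lie in [0, 1] (hypothetical convention).
    Returns an (N,) integer array; points with the same bit pattern share an ID.
    """
    # Binarize each embedding dimension (the threshold is an assumed value).
    bits = (embeddings > threshold).astype(np.int64)
    # Interpret each row of bits as a little-endian binary number.
    weights = 1 << np.arange(bits.shape[1], dtype=np.int64)
    return bits @ weights


# Three points with 3-dimensional embeddings; the first two binarize to the same code.
emb = np.array([[0.9, 0.1, 0.2],
                [0.8, 0.2, 0.1],
                [0.1, 0.9, 0.7]])
ids = decode_instance_ids(emb)
```

Under these assumptions, decoding is a threshold followed by a dot product, so no offline clustering pass is required, and a D-dimensional code can distinguish up to 2^D instances without fixing the object count in advance.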

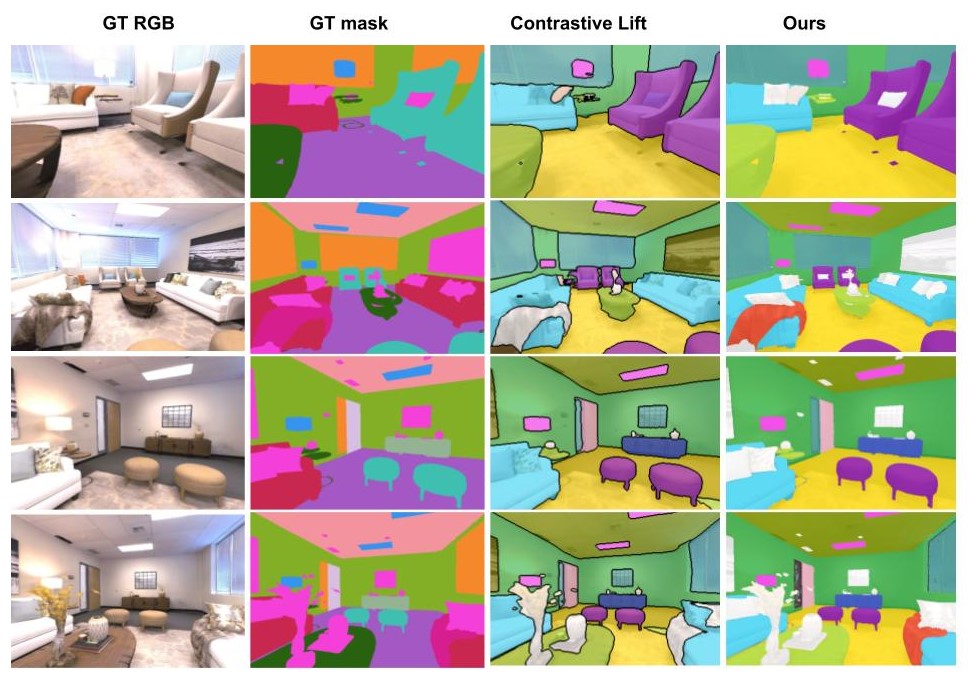

Results