Fact-Checking in SLMs

Refining Factual Verification via Curriculum-Inspired and Reinforcement-Guided Optimization

Note: I kept this explanation high-level, intended to illustrate how the model can be improved in challenging scenarios. If you’re interested in the detailed implementation or research behind it, reach out to us at AutoAlign AI.

Summary

Factual verification plays a critical role in combating fake news circulating on the internet and mitigating hallucinations generated by language models. However, using proprietary large models for this task introduces privacy concerns and incurs significant operational costs. Running a 600B-parameter model locally is impractical due to its resource intensity.

Small Language Models (SLMs), in contrast, are lightweight enough to run on local infrastructure. Yet they typically lack the reasoning capabilities and contextual understanding required for complex or long-form inputs. To bridge this gap, we fine-tuned SLMs in two stages: we first trained the model on long-context datasets, then fine-tuned it specifically for factual verification tasks. This two-stage approach significantly improved the model’s performance, beating both open-source and closed-source LLMs across multiple benchmark datasets.

Challenges

Despite the importance of fact-checking, high-quality datasets in this domain are limited. Existing datasets are often biased towards short to medium-length contexts, typically ranging from a few hundred to a thousand tokens. However, real-world scenarios—particularly in enterprise applications—require the model to handle significantly longer contexts (up to 32k tokens). Training on short-context data alone doesn’t prepare models to reason over lengthy documents, rendering them ineffective for production use.

Moreover, accurate reasoning over large and noisy document collections—such as enterprise knowledge bases or internet-scale corpora—is inherently difficult. The structure of these documents is often complex, and extracting relevant information for verification can be non-trivial.

Off-the-shelf SLMs struggle in such environments, frequently producing hallucinated answers or incorrect figures. Enterprises attempt to mitigate these failures through prompt engineering, but this is a reactive and unsustainable approach. Constantly tweaking prompts is labor-intensive, brittle, and fails to scale in production environments.

Solution

A robust solution requires both architectural and training improvements. Given the scarcity of fact-checking data, we adopted a curriculum learning strategy, where the model is progressively trained on tasks of increasing difficulty and complexity. We first trained the model on large-scale reasoning and comprehension datasets to strengthen its contextual understanding, then fine-tuned it on domain-specific factual verification data.

How can we align gradients in curriculum and fine-tuning stages?

A key challenge in combining curriculum learning with domain-specific fine-tuning is ensuring that the learning signals from both stages are aligned; misalignment can lead to forgetting or degraded performance. To address this, we structure training to first build general reasoning abilities through broad tasks, then gradually shift to targeted fact-checking. Throughout, we apply high-level strategies to ensure knowledge is transferred smoothly and reinforced across stages, enabling the model to improve without losing previously learned capabilities.

Our Approach

To operationalize this strategy, we created a pipeline of three curated datasets:

1. Gradient-Aligned Curriculum Dataset

A diverse collection of reasoning and comprehension tasks sorted by difficulty. This dataset helps the model acquire foundational skills for long-context understanding in a stable and generalizable way.

2. SFT - Fact-Checking Dataset

A supervised dataset containing fact-checking problems with reasoning traces. We used a mix of easy and moderately difficult examples here. Samples were manually inspected for quality, structure, and reasoning depth.

3. RL - Fact-Checking Dataset

A reinforcement learning dataset composed exclusively of hard cases that require multi-step reasoning or retrieval from large documents. This stage pushes the model beyond typical LLM capabilities and drives generalization.
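The "sorted by difficulty" ordering of the curriculum dataset can be illustrated with a minimal sketch. The `Example` class, the `difficulty` score, and the `curriculum_stages` helper are hypothetical names introduced for illustration; the post does not describe how difficulty was scored or how stages were scheduled.

```python
from dataclasses import dataclass
from typing import Iterator, List


@dataclass
class Example:
    text: str
    difficulty: float  # hypothetical score in [0, 1]; higher means harder


def curriculum_stages(examples: List[Example], num_stages: int) -> Iterator[List[Example]]:
    """Yield training pools of increasing difficulty.

    Stage k holds the easiest k/num_stages fraction of the data, so easier
    examples stay in the pool while harder ones are added.
    """
    if num_stages < 1:
        raise ValueError("num_stages must be at least 1")
    ordered = sorted(examples, key=lambda ex: ex.difficulty)
    for stage in range(1, num_stages + 1):
        cutoff = max(1, len(ordered) * stage // num_stages)
        yield ordered[:cutoff]


if __name__ == "__main__":
    data = [Example("a", 0.9), Example("b", 0.1), Example("c", 0.5), Example("d", 0.3)]
    for i, pool in enumerate(curriculum_stages(data, num_stages=2), start=1):
        print(i, [ex.text for ex in pool])
```

Keeping earlier examples in later pools is one simple way to revisit easier material as harder material arrives; it is an assumption of this sketch, not a description of the actual training schedule.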

To ensure data quality:

- We applied multi-level filtering based on difficulty, diversity, and formatting quality.

- Only datasets requiring substantial reasoning were retained.

- Poorly formatted or overly simplistic examples were discarded.
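A filter along these lines might look like the sketch below. The field names (`claim`, `context`, `label`, `difficulty`), the threshold, and the duplicate-claim check used as a stand-in for diversity are all assumptions made for illustration; the actual filtering criteria are not given in this post.

```python
from typing import Dict, List

REQUIRED_FIELDS = ("claim", "context", "label")  # hypothetical schema


def is_well_formatted(example: Dict[str, object]) -> bool:
    """Formatting check: every required field is a non-empty string."""
    return all(
        isinstance(example.get(name), str) and example[name].strip()
        for name in REQUIRED_FIELDS
    )


def filter_examples(examples: List[Dict[str, object]],
                    min_difficulty: float = 0.3) -> List[Dict[str, object]]:
    """Apply formatting, difficulty, and (crude) diversity filters in order."""
    kept: List[Dict[str, object]] = []
    seen_claims = set()
    for ex in examples:
        if not is_well_formatted(ex):
            continue  # poorly formatted
        if float(ex.get("difficulty", 0.0)) < min_difficulty:
            continue  # overly simplistic
        key = ex["claim"].strip().lower()
        if key in seen_claims:
            continue  # duplicate claim, adds no diversity
        seen_claims.add(key)
        kept.append(ex)
    return kept
```

A real diversity filter would likely use something richer than exact-match deduplication, such as embedding similarity; the exact-match check just keeps the sketch self-contained.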

Creating reasoning traces for difficult problems is especially challenging. In our evaluation, even closed models failed to generate correct traces in over 80% of hard examples. Therefore, we manually curated traces for easier and moderately difficult problems to support SFT. For RL training, we used only the most challenging examples, skipping the need for predefined reasoning traces and letting the model learn through exploration and reward optimization. This not only improves performance but also reduces the overhead of manual trace generation. 😉
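The split described above, with traced easy and moderate examples going to SFT and hard examples going to RL without traces, can be sketched as follows. The `difficulty` and `reasoning_trace` fields and the `hard_threshold` value are hypothetical; the post does not state how the boundary between moderate and hard was drawn.

```python
from typing import Dict, List, Tuple

ExampleDict = Dict[str, object]


def route_by_difficulty(examples: List[ExampleDict],
                        hard_threshold: float = 0.7
                        ) -> Tuple[List[ExampleDict], List[ExampleDict]]:
    """Split a pool into an SFT set (needs traces) and an RL set (no traces)."""
    sft_pool: List[ExampleDict] = []
    rl_pool: List[ExampleDict] = []
    for ex in examples:
        if float(ex["difficulty"]) >= hard_threshold:
            # Hard case: drop any trace; the model explores and is scored by reward.
            rl_pool.append({k: v for k, v in ex.items() if k != "reasoning_trace"})
        elif ex.get("reasoning_trace"):
            # Easy or moderate case with a curated trace: usable for SFT.
            sft_pool.append(ex)
        # Easy or moderate cases without a trace are left out of both sets.
    return sft_pool, rl_pool
```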

Training

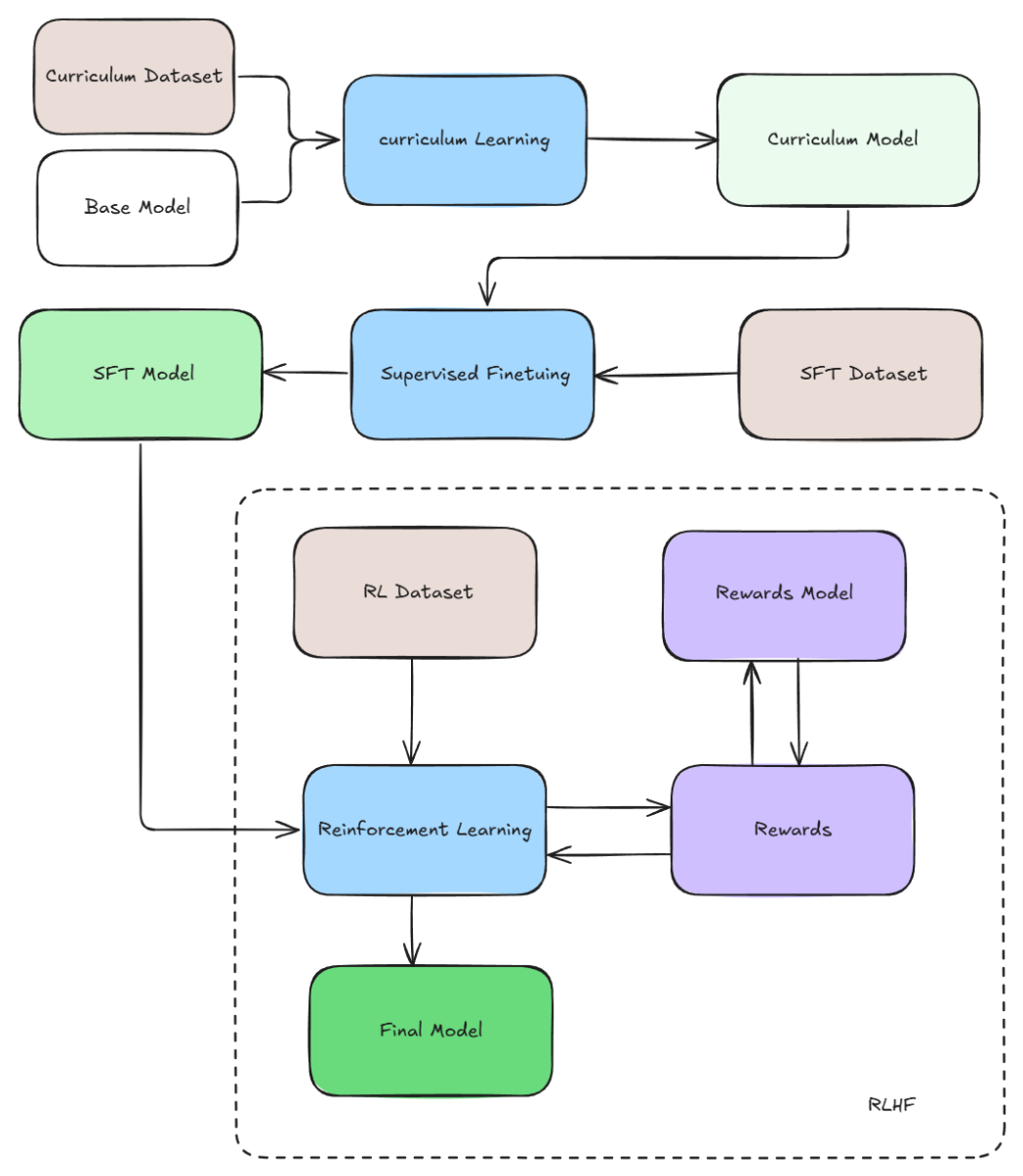

The training pipeline follows a multi-stage approach to progressively align the model with task objectives and human preferences. The stages are:

- Curriculum Learning: We begin by training the Base Model on a Curriculum Dataset, where data is structured from simple to complex examples. This strategy, known as Curriculum Learning, helps the model build foundational capabilities before tackling more nuanced tasks. This stage improves convergence and boosts performance by presenting learning challenges in an optimized order.

  - Input: Curriculum Dataset

  - Output: Curriculum Model

- Supervised Fine-Tuning (SFT): Next, the Curriculum Model is fine-tuned using a more task-specific SFT Dataset. This is a standard Supervised Fine-Tuning process, where the model learns to follow instructions or generate desired outputs based on labeled input-output pairs. This step ensures the model understands the specific domain or task it’s being adapted to.

  - Input: SFT Dataset

  - Output: SFT Model

- Reinforcement Learning from Human Feedback (RLHF): In the final phase, the SFT Model is further aligned using Reinforcement Learning, guided by human preferences:

  - A Rewards Model is trained to assign preference scores to model outputs.

  - This model scores responses generated from the RL Dataset.

  - Using these rewards, the system applies Reinforcement Learning (typically GRPO) to adjust the model parameters.

  - Inputs: RL Dataset, Rewards Model

  - Output: Final Model
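To make the GRPO step more concrete, here is a minimal sketch of the group-relative advantage that gives the method its name: several responses are sampled for the same prompt, each is scored, and every score is normalized against its own group. The function name and the `eps` constant are illustrative, and the choice of population standard deviation is an assumption; implementations differ on these details, and this is not the project's training code.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Normalize the rewards of one prompt's sampled responses within the group.

    A response scoring above the group mean gets a positive advantage,
    one scoring below gets a negative advantage.
    """
    if not rewards:
        raise ValueError("rewards must contain at least one score")
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; an assumption of this sketch
    return [(r - mu) / (sigma + eps) for r in rewards]


if __name__ == "__main__":
    # Four sampled responses to one hard fact-checking prompt, scored 0 or 1.
    print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

Because advantages are computed relative to the group, no separate value network is needed, which is one reason GRPO is attractive on limited hardware. If every response in a group receives the same reward, all advantages are zero and that prompt contributes no learning signal.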

Optimizations in Training

The main bottleneck in our pipeline is the reinforcement learning (RL) stage, especially when using reasoning-heavy tasks and GRPO (Group Relative Policy Optimization). On standard hardware like L4 GPUs, fine-tuning can take several hours to days. To accelerate this process, we introduced key optimizations in how GRPO training is handled.

Common Practice (and Its Limitations)

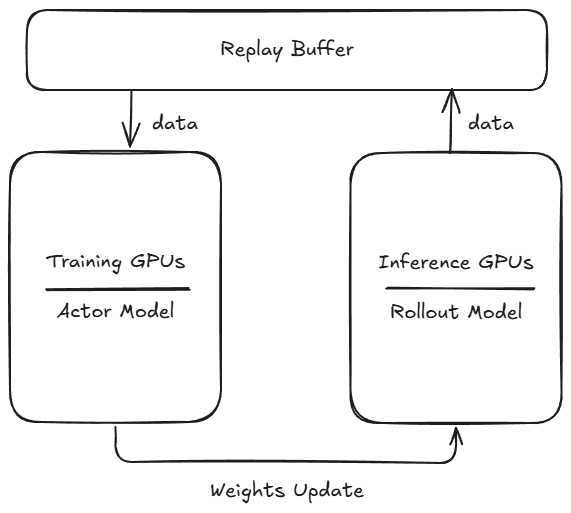

Typically, RL training is split across different GPU roles:

- A subset of GPUs is reserved for inference using fast open-source libraries like vLLM or SGLang.

- The actor model is loaded onto the remaining GPUs for training, and the rollout model gets updated by syncing weights after each step.

However, this setup is inefficient:

- Inference GPUs are underutilized due to limited parallelism and caching.

- The actor model, loaded on fewer GPUs, consumes more memory per GPU—forcing smaller batch sizes and slowing down training significantly.

Our Optimized Approach

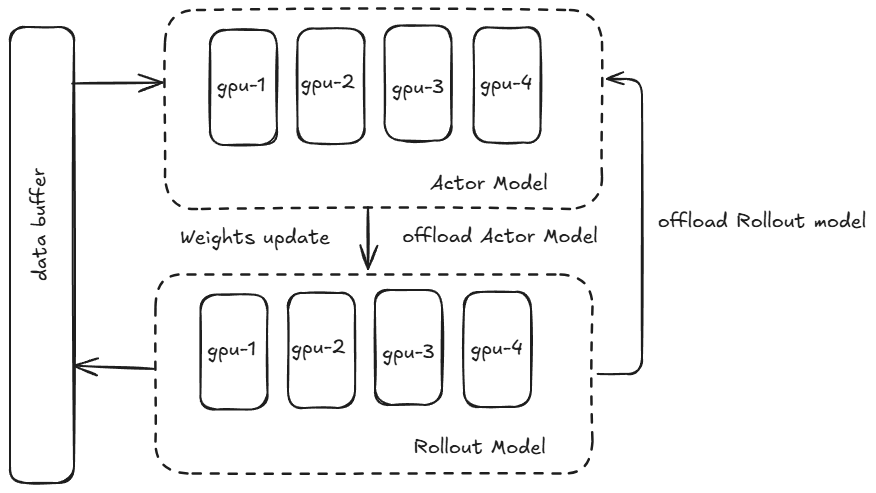

To overcome these issues, we used a different setup that maximizes GPU utilization (inspired by the colocate mode in ms-swift):

- All GPUs are used for both training and inference.

- We offload the rollout model to CPU during training steps (weight updates).

- We offload the actor model to CPU during inference steps (rollouts).

By dynamically offloading models and sharing all GPUs across both phases, we reduce memory pressure, enable larger batch sizes, and significantly increase parallelism. This optimization cuts training time from 20 hours down to just 9 hours (roughly a 2.2x speedup), making RL fine-tuning far more practical on limited hardware.
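The alternation between the two phases can be illustrated with a toy scheduler. It only tracks which model occupies the GPUs at each point; `ModelSlot`, `ColocatedLoop`, and the `version` counter are hypothetical names, and no real tensors are moved. In practice the training framework and the inference engine handle the offloading.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ModelSlot:
    name: str
    device: str = "cpu"
    version: int = 0  # stands in for the current weights


@dataclass
class ColocatedLoop:
    actor: ModelSlot = field(default_factory=lambda: ModelSlot("actor"))
    rollout: ModelSlot = field(default_factory=lambda: ModelSlot("rollout"))
    log: List[str] = field(default_factory=list)

    def _check_exclusive(self) -> None:
        # The point of colocation: the two models never share GPU memory.
        assert not (self.actor.device == "gpu" and self.rollout.device == "gpu")

    def rollout_phase(self) -> None:
        self.actor.device = "cpu"    # offload the actor first to free memory
        self.rollout.device = "gpu"  # then all GPUs serve inference
        self._check_exclusive()
        self.log.append("rollout")

    def train_phase(self) -> None:
        self.rollout.device = "cpu"  # offload the rollout model first
        self.actor.device = "gpu"    # then all GPUs serve the weight update
        self._check_exclusive()
        self.actor.version += 1
        self.log.append("train")

    def sync_weights(self) -> None:
        # The rollout model picks up the freshly updated weights.
        self.rollout.version = self.actor.version
        self.log.append("sync")

    def step(self) -> None:
        self.rollout_phase()
        self.train_phase()
        self.sync_weights()
```

The trade-off is extra host-device transfer time at each phase switch in exchange for every GPU being available to whichever phase is running.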

Acknowledgements

This work is inspired by DeepSeek-R1, Llama, and s1. Its implementation is built upon veRL and ms-swift. We sincerely thank these teams for their contributions to open-source research and development.

- https://arxiv.org/pdf/2501.12948

- https://arxiv.org/html/2501.19393v1

- https://github.com/volcengine/verl

- https://github.com/modelscope/ms-swift/tree/main

I’d also like to thank the incredible team at AutoAlign AI for making this project possible.

For any further details or collaboration inquiries, feel free to reach out to AutoAlign AI.